Every year the Social Statistics Section of the Royal Statistical Society organizes a memorial lecture in honor of Cathie Marsh. This year the lecture focused on surveys with a provocative title: Is there a future for surveys? Two leading experts with different backgrounds were invited to present their views: Guy Goodman, Chief Executive of NatCen Social Research and Tom Smith, Managing Director of the Data Science campus at ONS.

[expand title=”More about Cathie Marsh“]

She was a teacher and lecturer both at Cambridge University and University of Manchester and she was a strong proponent of the use of quantitative methods in the social sciences. She had a wide-ranging impact in the social sciences in the UK through her students as well as the institutions that continue her work, such as the Cathie Marsh Institute at the University of Manchester. For those of you who want to find out more about Cathie Marsh you can look here.

[/expand]

Here is a little summary and my thoughts on the talks.

Main points from Guy Goodman

Guy Goodman argued that surveys have become unfashionable partly due to a popular view that all our problems will be solved by the new forms of data. For people with such views surveys represent a challenge as they are still popular and important.

He went on to highlight how surveys are still essential and can’t be replaced too soon. One of his examples is the ability to answer the question “Why?” He argued that because of the breath of information available in surveys they are uniquely placed to answer such questions. He also argued that surveys have the power to manage the complexity of life and through their tailored design can investigate most research questions.

Nevertheless he agreed that surveys need to change and that combining multiple sources of data will become more prevalent. New areas where he expects change include the development of new types of surveys, innovations in data collection (e.g., mixed modes and Web surveys) as well as the increase use of linked longitudinal survey and admin data.

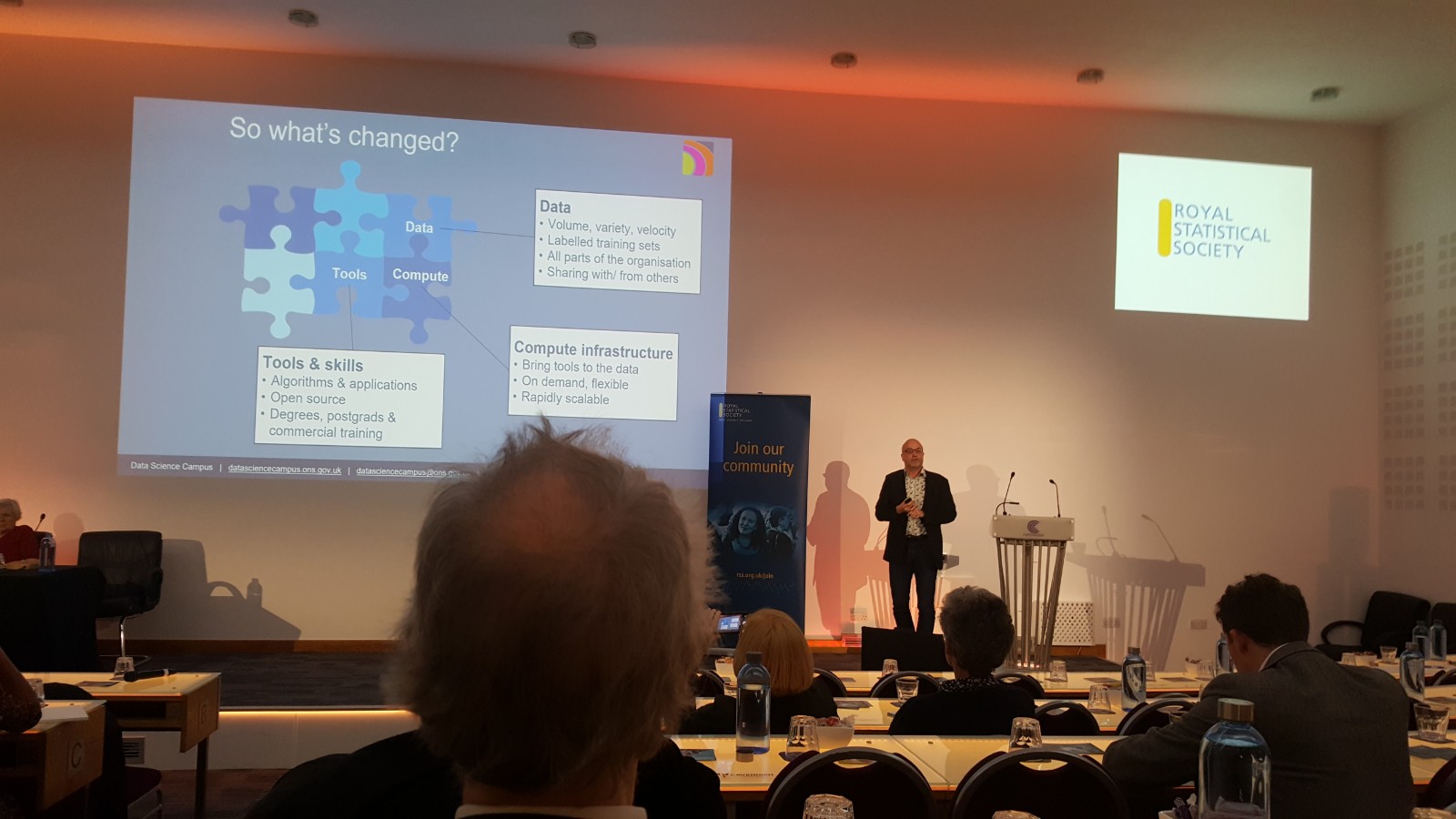

Main points from Tom Smith

Tom Smith started his talk by highlighting that all data sources are biased and that we need multiple types of data to triangulate the truth. In his view the new forms of data bring more “power” (and by “power” I think he means less measurement error).

Throughout the talk he has stated that surveys should be the last resort and their utility is mainly in measuring attitudes and beliefs and “filling in the gaps”. He supports the use of admin data as the main data source and as a “data spine” to which different forms of data are later added.

Throughout the talk he highlighted some of the new things that we are able to do with new forms of data, such as estimating monthly GDP, investigating inefficiencies in the NHS or doing a “census” of green space in the UK. Some of this new research can have important policy implications. For example, being able to change policies based on monthly GDP could have saved the UK 12 billion pounds while the NHS research could potentially save 580 million pounds.

Finally, he also highlighted that while the census clearly contributes to the wealth of the UK it should nevertheless be replaced by administrative data due to cost reasons.

Some thoughts

There were also a few interesting comments and questions at the end. One of them asked about ways to develop trust in this new data environment. Guy and Tom had both very good (and different) perspectives on this. Guy highlighted the need to build trust in institutions that publish data and analyses. On the other hand Tom mentioned the potential of the open source model as a solution for insuring quality and tackling new challenges.

It seems that the two speakers agreed on most of the issues although there were obvious differences of emphasis. For example, while they both highlighted the need for integration of multiple types of data I think they had very different perspectives from where to start. Tom clearly thought that admin data together with other sources should be prefered to surveys, the latter being only a last resort. On the other hand, Guy sees surveys as the bedrock of future research due to the wealth of data that they collect and their tailored nature.

Guy also highlighted that often the new forms of data are collected with high frequency (e.g., continuous stream of social media data) but some things don’t need to be measured at that level. He gave an example of attitudes and how surveys are well equipped to measure their slow change in time. On the other hand Tom showed some examples where more frequent data collection can have actually significant benefits (e.g., monthly GDP). I think the ability to collect data at a faster rate will also make us a little more creative and give ideas on how to best make use of it.

There was another interesting idea floated around: the use of pre-populated surveys. If I understood correctly that would imply using admin data and other information to fill in part of the survey and ease the burden on the respondent. This is similar to some of the things done in longitudinal surveys with previous wave information being used as the default value for some questions. While this might be an interesting idea I do have some concerns about the potential reaction of respondents once they find out all the things that the survey organization knows about them (before asking them any questions). It will be interesting to see how this idea develops and is implemented.

There was another interesting idea floated around: the use of pre-populated surveys. If I understood correctly that would imply using admin data and other information to fill in part of the survey and ease the burden on the respondent. This is similar to some of the things done in longitudinal surveys with previous wave information being used as the default value for some questions. While this might be an interesting idea I do have some concerns about the potential reaction of respondents once they find out all the things that the survey organization knows about them (before asking them any questions). It will be interesting to see how this idea develops and is implemented.

Lastly, there was quite some discussion about quality and how to insure it. I was not really convinced by how Tom started the talk, saying that all data is biased. While I agree with him there surely is a matter of degree here. Saying that “all data is biased” sounds very similar to saying “all data is equally biased” and we know that’s not true. A good example is the recent Guardian article that compared incomes across countries using Revolute data. The data used in that article has probably more bias than official statistics or high quality surveys. While less exciting, using traditional methods with proven quality would have been prefered in this case. A better use of such transaction data would have been to investigate research questions that are harder to answer with surveys, such as frequency of transactions throughout the day/week/month.

Overall these were two interesting talks. Unfortunately less controversial than what I would have expected, the two speakers agreed on most things discussed. If you want to read a different view on the same topic I recommend the excellent keynote Mick Couper had for the ESRA conference a few years ago. It is now published as a paper (it’s open access here).

I’ll try to end on a positive note (for survey methodologists). While survey research is going to face a number of new challenges “we are always going to need to ask people questions” (Matthew J. Salganik). Big data is going to make surveys more valuable not less.

“Linking surveys to big data sources enables you to produce estimates that would be impossible with either data source individually”. Matthew J. Salganik

You can see the full video of the lecture bellow. You can also have a look at the previous lectures underneath.

Past lectures

[expand title=”Show videos“]

2017 – How unequal is the UK – and should we care?

2016 – Statistics in the EU referendum

2015 – The failure of polls and the future of survey research

2013 – What can RCTs bring to social policy evaluation?

[/expand]