In recent years web surveys and mixed mode designs (combining multiple modes of interview in the same survey) have become increasingly popular. This is the result of multiple push factors such as higher costs of data collection, low response rates, high penetration of the internet and the need for timely data.

However, one of the big unknowns in this transition is the comparability of measures. Are answers to web surveys the same as answers given face to face? If not, what are the implications for comparability within mixed mode designs? Can we compare estimates across time?

This mode shift is of concern especially for longitudinal data. This is because estimates of change, aggregate or individual, can be biased by the shift in mode of interview. For example, what might appear as changing attitudes towards immigration could just reflect a shift to web data collection.

One reason why this question is still open is that comparing modes is difficult. We typically observe a combination of mode effects on measurement (for example, people tend to be more honest in self-completion modes) and selection effects (for example, younger respondents may be more likely than older respondents to answer on a mobile device). Separating these two processes is hard.

Web surveys versus face to face: estimating mode differences in measurement

We investigated this issue in a recent article written with Melanie Revilla. We used an innovative data collection carried out by the European Social Survey (ESS). The experiment, called the CROss-National Online Survey (CRONOS), invited respondents of the ESS to join a web panel. Those who did not have internet access or a computer received a tablet with an internet connection. The design was implemented in Estonia, Slovenia and the United Kingdom.

CRONOS includes some questions that are also asked face to face in the main ESS survey. As a result, it is possible to compare web and face to face answers to the same questions from the same respondents. This gives us an estimate of the mode effect on measurement without the usual issues of self-selection.

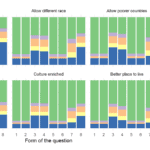

To understand whether the two interview modes influence measurement, we investigated a number of data quality indicators. These included: item non-response, non-differentiation (always selecting the same response category), primacy and recency (the tendency to select the first or last category) and individual differences in answers.

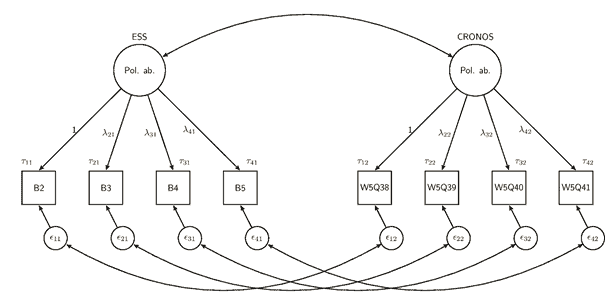
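
As an illustration, the data quality indicators listed above can be sketched in a few lines of Python. This is a simplified sketch, not the operationalisation used in the paper: the function names, the use of `None` for a skipped question and the 1 to 5 response scale are all assumptions made for the example.

```python
from typing import Optional, Sequence

# Hypothetical helpers for illustration; None marks a skipped question.
Answers = Sequence[Optional[int]]


def item_nonresponse_rate(answers: Answers) -> float:
    """Share of questions in the battery that were left unanswered."""
    return sum(a is None for a in answers) / len(answers)


def is_non_differentiated(answers: Answers) -> bool:
    """True when every answered item received the same response category."""
    given = [a for a in answers if a is not None]
    return len(given) > 1 and len(set(given)) == 1


def category_share(answers: Answers, category: int) -> float:
    """Share of answered items on which a given category was selected."""
    given = [a for a in answers if a is not None]
    if not given:
        return 0.0
    return sum(a == category for a in given) / len(given)


# One respondent's answers to ten items on an assumed 1-5 scale.
example = [1, 1, None, 5, 3, 1, 5, 2, None, 1]

nonresponse = item_nonresponse_rate(example)   # 2 of 10 items skipped
straightlining = is_non_differentiated(example)
primacy = category_share(example, category=1)  # first category chosen
recency = category_share(example, category=5)  # last category chosen

print(nonresponse, straightlining, primacy, recency)
```

Computing the same indicators separately for the web and the face to face answers of each respondent, and then comparing them, mirrors the logic of the within-person design.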

We also used latent variable modeling to investigate whether the concepts of interest are measured in the same way. We used measurement equivalence testing (also known as invariance or DIF testing) to see whether the two modes measure social concepts in the same way.
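
As a rough sketch of what equivalence testing checks, assume a standard multi-group factor model in which the answer of respondent $i$ to item $j$ in mode $g$ depends on a latent concept $\eta$. The notation is generic and is not taken from the paper.

```latex
y_{ij}^{(g)} = \tau_j^{(g)} + \lambda_j^{(g)} \, \eta_i^{(g)} + \varepsilon_{ij}^{(g)},
\qquad g \in \{\text{web}, \text{face to face}\}

% Metric equivalence: equal loadings across modes
\lambda_j^{(\text{web})} = \lambda_j^{(\text{face to face})} \quad \text{for all } j

% Scalar equivalence: equal loadings and equal intercepts across modes
\lambda_j^{(\text{web})} = \lambda_j^{(\text{face to face})}
\quad \text{and} \quad
\tau_j^{(\text{web})} = \tau_j^{(\text{face to face})} \quad \text{for all } j
```

In this framework, metric equivalence is what is usually required to compare unstandardized relationships across modes, while comparing averages of the latent concept additionally requires scalar equivalence.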

Are web surveys different?

Although the overall amount of item missing data is small, respondents tended to skip questions more often when answering online. We also see more primacy and recency when respondents answer online.

Looking at each question in turn, we find few differences between the answers given face to face and online. When we try to explain the individual differences in answers, only 17 of the 198 effects considered are statistically significant. Moreover, there is no systematic pattern for the significant effects. Thus, it seems that none of the variables considered can explain individual differences in data quality across modes.

Lastly, we look at the comparability of social concepts across modes. Here we see mixed results. They suggest that we can be quite confident in comparing unstandardized relationships between web and face to face. Nevertheless, we are somewhat less confident in comparing averages.

Practical implications

Overall the results suggest that web and face-to-face answers are comparable. In practice, it means that using a web panel as a follow-up to a face-to-face survey and then combining the data from both seems feasible. Also, it might indicate that a switch to web or mixed-mode may not have large detrimental effects on comparisons.

This is a very positive result for survey methodologists and users of the ESS. Nevertheless, researchers should continue to be vigilant and run sensitivity checks to see if the mode of interview influences results. At the same time, methodologists should continue their work to minimize mode effects and to understand the processes that produce them.

Want to learn more about this research? Check out the original paper:

Cernat, A., & Revilla, M. (2020). Moving from Face-to-Face to a Web Panel: Impacts on Measurement Quality. Journal of Survey Statistics and Methodology. https://doi.org/10.1093/jssam/smaa007

Also, check out an upcoming free webinar where I will be discussing this study and more: